| import urllib

import requests

import os

import sys

import time

from bs4 import BeautifulSoup

import cv2

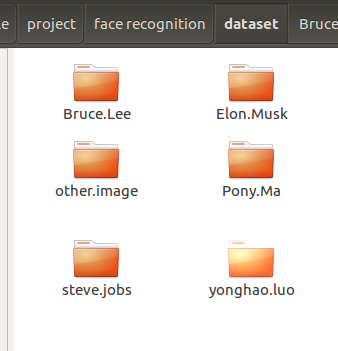

save_path_S = '../dataset/yonghao.luo/'

search_keyword_S = '罗永浩'

class CrawlersExtractiveImage:

def __init__(self, save_path, search_keyword):

self.save_path = save_path

self.search_keyword = urllib.parse.quote(search_keyword)

self.save_people_name = self.save_path.split('/')[-2]

self.rectanglecolor = (0, 255, 0)

print("web spider for same of people's picture!", end='\r')

def SaveImage(self, image_link, image_cout):

'''

:param image_link: 将要存储的图片链接

:param search_keyword: 图片的关名字

:param image_name: 图片的id编号

:return:

'''

try:

urllib.request.urlretrieve(image_link, self.save_path + self.save_people_name + str(image_cout) + '.jpg')

time.sleep(1)

except Exception:

time.sleep(1)

print("产生未知错误,放弃保存", end='\r')

else:

print("图+1,已有" + str(image_cout) + "张图", end='\r')

def Save_image_2(self, image_link, image_cout):

s = requests.session()

headers = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3',

'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.120 Safari/537.36'

}

s.headers.update(headers)

html = s.get(url=image_link, timeout=20)

path = self.save_path + self.save_people_name + str(image_cout) + '-.' + image_link.split('.')[-1]

with open(path, 'wb') as file:

file.write(html.content)

file.close()

print("文件保存成功", '\n', '\n', end='\r')

time.sleep(3)

def FindLink(self, PageNum):

for i in range(3, PageNum):

num_disp_k = 0

if True:

url = 'http://cn.bing.com/images/async?q={0}&first={1}&count=35&relp=35&scenario=ImageBasicHover&datsrc=N_I&layout=RowBased_Landscape&mmasync=1&dgState=x*188_y*1308_h*176_c*1_i*106_r*24'

agent = {

'User-Agent': "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.120 Safari/537.36"

}

page1 = urllib.request.Request(url.format(self.search_keyword, i * 35 + 1), headers=agent)

page = urllib.request.urlopen(page1)

soup = BeautifulSoup(page.read(), 'html.parser')

if not os.path.exists(self.save_path):

os.mkdir(self.save_path)

for StepOne in soup.select('.iusc'):

link = eval(StepOne.attrs['m'])['murl']

num_disp_k = len(os.listdir(self.save_path)) + 1

self.get_head_image(link, num_disp_k)

print("输出第 %d 轮:%d 张图片" %(i, num_disp_k), '\n', self.search_keyword, '\t', num_disp_k, link, end='\n')

def get_head_image(self, urlimage, num_disp_k):

cap = cv2.VideoCapture(urlimage)

ok, origin_image = cap.read()

if ok:

path = '/home/porter/opencv-3.4.3/data/haarcascades/'

classfier = cv2.CascadeClassifier(path + "haarcascade_frontalface_alt2.xml")

grey = cv2.cvtColor(origin_image, cv2.COLOR_BGR2GRAY)

faceRects = classfier.detectMultiScale(grey, scaleFactor=1.2, minNeighbors=3, minSize=(32, 32))

if len(faceRects) > 0:

for faceRect in faceRects:

x, y, w, h = faceRect

img_name = '%s/%d.jpg' % (self.save_path, num_disp_k)

image = origin_image[y - 10: y + h + 10, x - 10: x + w + 10]

cv2.imwrite(img_name, image)

time.sleep(0.2)

cap.release()

cv2.destroyAllWindows()

else:

print("urlimage错误:", urlimage)

if __name__=='__main__':

PageNum = 15

app = CrawlersExtractiveImage(save_path_S, search_keyword_S)

app.FindLink(PageNum)

|
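The two parsing steps inside `FindLink` (building the paged query URL and reading `murl` from the `m` attribute of each `.iusc` element) can be tried without network access or third-party packages. The sketch below is only an illustration under stated assumptions: `URL_TEMPLATE` is a shortened stand-in for the script's full URL, `build_page_url`, `IuscLinkParser`, and `extract_image_links` are hypothetical helper names, and `SAMPLE` is a made-up HTML fragment, not real Bing output. It also assumes the `m` attribute contains a JSON object.

```python
import json
import urllib.parse
from html.parser import HTMLParser

# Shortened, hypothetical template; the script's real URL carries more query parameters.
URL_TEMPLATE = 'http://cn.bing.com/images/async?q={0}&first={1}&count=35'


def build_page_url(keyword, page_index, per_page=35):
    """Return the URL of one result page, using the script's i * 35 + 1 offset."""
    return URL_TEMPLATE.format(urllib.parse.quote(keyword), page_index * per_page + 1)


class IuscLinkParser(HTMLParser):
    """Collect the 'murl' value of every element whose class list contains 'iusc'."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        classes = (attributes.get('class') or '').split()
        if 'iusc' not in classes or not attributes.get('m'):
            return
        try:
            # HTMLParser has already unescaped entities such as &quot; here.
            metadata = json.loads(attributes['m'])
        except json.JSONDecodeError:
            return  # skip malformed metadata instead of aborting the crawl
        if isinstance(metadata, dict) and 'murl' in metadata:
            self.links.append(metadata['murl'])


def extract_image_links(html_text):
    """Return all image links found in an HTML fragment."""
    parser = IuscLinkParser()
    parser.feed(html_text)
    return parser.links


# Made-up fragment for illustration only.
SAMPLE = ('<a class="iusc" m="{&quot;murl&quot;:&quot;http://example.com/a.jpg&quot;}"></a>'
          '<a class="other" m="{}"></a>')

if __name__ == '__main__':
    print(build_page_url('罗永浩', 3))
    print(extract_image_links(SAMPLE))
```

Parsing with `json.loads` fails safely on unexpected input, whereas `eval` would execute whatever text the page returns.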