本文主要记录Ubuntu下yolov4数据的训练

安装opencv4 安装opencv_contrib 样本标注 生成yolov4需要的数据集 配置GPU 修改yolov4网络配置参数 开始训练 默认前三步制作结束,本文主要从第四步生成YOLOV4所需要的数据开始

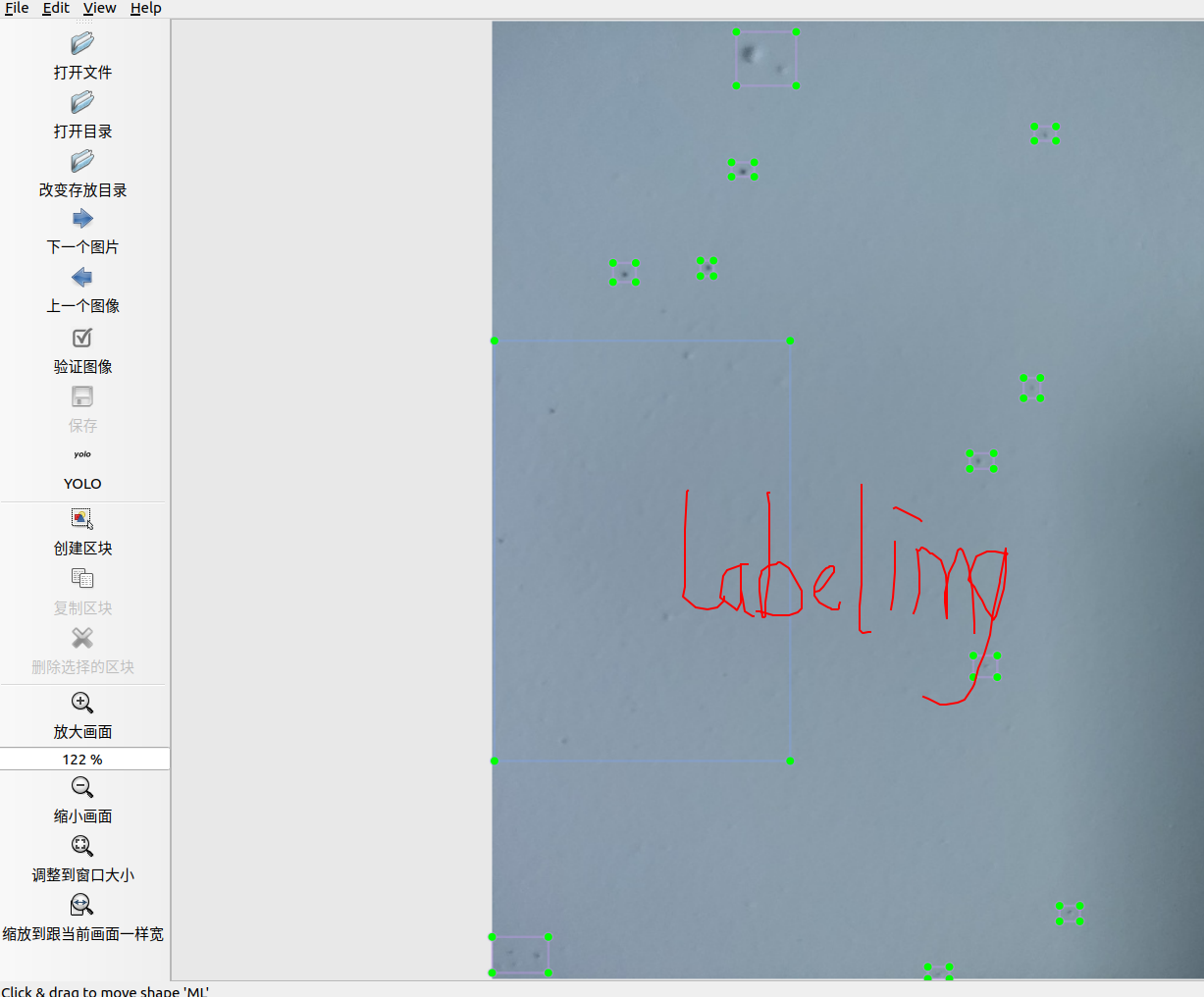

[x] Edit By Porter, 积水成渊,蛟龙生焉。 样本标注 使用labelimg标注工具得到yolo需要的txt格式的label标注文件,所以对于样本我们就有了原始图像数据集img文件和原始图片对应的标注文件label文件夹。如果是用pascolVOC我们可以通过xml转txt文件实现转换。公网IP购买

最终得到的:

接下来回顾下我们需要用到的训练命令

1 ./build_release/darknet detector train data/my_dataset.data cfg/yolov4-my_dataset.cfg backup/yolov4-my_dataset.weights -map

上面命令中的my_dataset.data 里面包含了训练样本图片的地址,训练样本的标签地址,内容如下:

1 2 3 4 5 6 $ cat cfg/porter.data classes= 8 train = /home/porter/WorkSpace/YOLO4_test/YOLOV4/darknet/train.txt valid = /home/porter/WorkSpace/YOLO4_test/YOLOV4/darknet/test.txt names = /home/porter/WorkSpace/YOLO4_test/YOLOV4/darknet/data/porter.names backup = /home/porter/WorkSpace/YOLO4_test/YOLOV4/darknet/backup/

如上所示,需要训练集(train.txt)、验证集(test.txt),需要分类的名字(porter.name ),需要模型备份地址(/back/)。所以接下来就是对样本进行训练集和测试集的划分。

我们需要制作成如下的训练/测试样本集的*.txt效果:

1 2 3 4 5 6 (base) porter@porter:~$ more home/porter/WorkSpace/YOLO4_test/YOLOV4/darknet/train.txt /home/porter/WorkSpace/YOLO4_test/YOLOV4/darknet/VOCdevkit/VOC2007/JPEGImages/000113.jpg /home/porter/WorkSpace/YOLO4_test/YOLOV4/darknet/VOCdevkit/VOC2007/JPEGImages/000244.jpg /home/porter/WorkSpace/YOLO4_test/YOLOV4/darknet/VOCdevkit/VOC2007/JPEGImages/000222.jpg /home/porter/WorkSpace/YOLO4_test/YOLOV4/darknet/VOCdevkit/VOC2007/JPEGImages/000250.jpg /home/porter/WorkSpace/YOLO4_test/YOLOV4/darknet/VOCdevkit/VOC2007/JPEGImages/000077.jpg

生成yolov4所需要的数据集,这个部分需要生成一个train.txt和2007_train.txt、test.txt和2007_test.txt。

这两个文件可以通过下面的python脚本实现,这里说下这两个文件的区别:

路径不同:train.txt位于**VOCdevkit/VOC2007/ImageSets/Main/文件夹中。2007_train.txt位于 VOCdevkit/**中。test文件也是同样的。 两者的作用不同:train.txt主要是随机生成的照片名字的种类(其实这个文件可以不需要的,知识VOC格式里包含的而已),而2007_train.txt主要是随机生成的包含样本集具体文件路径的样本(这个才是有用的) 两者文件夹的内容不同:train.txt主要是包含训练样本的图片名字。而2007_train.txt主要包含训练样本图片的绝对路径。 注意这个脚本应该放在YOLOV4/darknet/VOCdevkit/目录下运行,名字可以随便起

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 import osimport randomimport sys''' 本脚本实现 【yolo格式的样本图片对应的txt标注文件】转为训练需要的文件。 本脚本首先会在VOCdevkit/VOC2007/ImageSets/Main下生成: test.txt---测试样本集中随机分配的样本名字 train.txt---训练样本集中随机分配的样本名字 val.tat---验证集中随机分配的样本名字 trainval.txt---测试验证集中的样本名字 注意:本脚本应该放在:YOLOV4/darknet/VOCdevkit/目录下运行 运行命令为:python3 yolotxt_to_trian.txt.py ~/WorkSpace/YOLO4_test2/YOLOV4/darknet/VOCdevkit ''' if len (sys.argv) < 2 :- print ("no directory specified, please input target directory" ) exit() root_path = sys.argv[1 ] xmlfilepath = root_path + '/VOC2007/labels' txtsavepath = root_path + '/VOC2007/ImageSets/Main' if not os.path.exists(root_path): print ("cannot find such directory: " + root_path) exit() if not os.path.exists(txtsavepath): os.makedirs(txtsavepath) trainval_percent = 0.9 train_percent = 0.8 total_xml = os.listdir(xmlfilepath) num = len (total_xml) list = range (num)tv = int (num * trainval_percent) tr = int (tv * train_percent) trainval = random.sample(list , tv) train = random.sample(trainval, tr) print ("train and val size:" , tv)print ("train size:" , tr)ftrainval = open (txtsavepath + '/trainval.txt' , 'w' )/darknet$ cat cfg/porter.data classes= 8 train = /home/porter/WorkSpace/YOLO4_test2/YOLOV4/darknet/VOCdevkit/2007_train.txt valid = /home/porter/WorkSpace/YOLO4_test2/YOLOV4/darknet/VOCdevkit/2007_val.txt names = data/porter.names backup = /home/porter/WorkSpace/YOLO4_test2/YOLOV4/darknet/backup/ ftest = open (txtsavepath + '/test.txt' , 'w' ) ftrain = open (txtsavepath + '/train.txt' , 'w' ) fval = open (txtsavepath + '/val.txt' , 'w' )- for i in list : name = total_xml[i][:-4 ] + '\n' if i in trainval: ftrainval.write(name) if i in train: ftrain.write(name) else : fval.write(name) else : ftest.write(name) ftrainval.close() ftrain.close() fval.close() ftest.close() import xml.etree.ElementTree as ETimport pickleimport osfrom os import listdir, getcwdfrom os.path import joinsets=[('2007' , 'train' ), ('2007' , 'val' ), ('2007' , 'test' )] wd = getcwd() for year, image_set in sets: image_ids = open ('./VOC%s/ImageSets/Main/%s.txt' %(year, image_set)).read().strip().split() list_file = open ('%s_%s.txt' %(year, image_set), 'w' ) for image_id in image_ids:YOLOV4/darknet/VOCdevkit/ list_file.write('%s/VOC%s/JPEGImages/%s.jpg\n' %(wd, year, image_id)) list_file.close() YOLOV4/darknet/VOCdevkit/ os.system("cat 2007_train.txt 2007_val.txt 2007_test.txt" )

1 python3 datahandle.py ~/WorkSpace/YOLO4_test2/YOLOV4/darknet/VOCdevkit/VOC2007

执行上面的程序后将会在VOCdevkit/VOC2007/ImageSets/Main文件夹产生对应的train.txt,trainval.txt, test.py和val.txt,以及在VOCdevkit/文件下生成三个2007_train.txt, 2007_test.txt, 2007_val.txt文件夹。

接下来配置yolo的训练配置文件:

/data/porter.names

1 2 3 4 5 6 7 8 9 /darknet$ cat data/porter.names LP LZ SC MH ML MD WR QT

以及

1 2 3 4 5 6 7 /darknet$ cat cfg/porter.data classes= 8 train = /home/porter/WorkSpace/YOLO4_test2/YOLOV4/darknet/VOCdevkit/2007_train.txt valid = /home/porter/WorkSpace/YOLO4_test2/YOLOV4/dYOLOV4/darknet/VOCdevkit/arknet/VOCdevkit/2007_val.txt names = data/porter.names backup = /home/porter/WorkSpace/YOLO4_test2/YOLOV4/darknet/backup/

我配置的makefile文件内容如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 GPU=1 CUDNN=1 CUDNN_HALF=0 OPENCV=1 AVX=0 OPENMP=0 LIBSO=1 ZED_CAMERA=0 ZED_CAMERA_v2_8=0 接下来就可以开始训练了 USE_CPP=0 DEBUG=0 ARCH= -gencode arch =compute_30,code=sm_30 \ -gencode arch =compute_35,code=sm_35 \ -gencode arch =compute_50,code=[sm_50,compute_50] \ -gencode arch =compute_52,code=[sm_52,compute_52] \ -gencode arch =compute_61,code=[sm_61,compute_61] OS := $(shell uname ) 接下来就可以开始训练了 VPATH=./src/ EXEC=darknet OBJDIR=./obj/ ifeq ($(LIBSO), 1) LIBNAMESO=libdarknet.so APPNAMESO=uselib endif html 字体颜色设置 ifeq ($(USE_CPP), 1) CC=g++ else CC=gcc endif CPP=g++ -std=c++11 NVCC=nvcc OPTS=-Ofast LDFLAGS= -lm -pthread COMMON= -Iinclude/ -I3rdparty/stb/include CFLAGS=-Wall -Wfatal-errors -Wno-unused-result -Wno-unknown-pragmas -fPIC ifeq ($(DEBUG), 1) 接下来就可以开始训练了= -Og -g COMMON+= -DDEBUG CFLAGS+= -DDEBUG else ifeq ($(AVX), 1) CFLAGS+= -ffp-contract=fast -mavx -mavx2 -msse3 -msse4.1 -msse4.2 -msse4a endif endif CFLAGS+=$(OPTS) ifneq (,$(findstring MSYS_NT,$(OS))) LDFLAGS+=-lws2_32 endif ifeq ($(OPENCV), 1) COMMON+= -DOPENCV CFLAGS+= -DOPENCV LDFLAGS+= `pkg-config --libs opencv4 2> /dev/null || pkg-config --libs opencv` COMMON+= `pkg-config --cflags opencv4 2> /dev/null || pkg-config --cflags opencv` endif ifeq ($(OPENMP), 1) ifeq ($(OS),Darwin) CFLAGS+= -Xpreprocessor -fopenmp else CFLAGS+= -fopenmp endif LDFLAGS+= -lgomp endif ifeq ($(GPU), 1) COMM 接下来就可以开始训练了ON+= -DGPU -I/usr/local/cuda/include/ CFLAGS+= -DGPU ifeq ($(OS),Darwin) LDFLAGS+= -L/usr/local/cuda/lib -lcuda -lcudart -lcublas -lcurand else LDFLAGS+= -L/usr/local/cuda/lib64 -lcuda -lcudart -lcublas -lcurand endif endif ifeq ($(CUDNN), 1) COMMON+= -DCUDNN ifeq ($(OS),Darwin) CFLAGS+= -DCUDNN -I/usr/local/cuda/include LDFLAGS+= -L/usr/local/cuda/lib -lcudnn else CFLAG 接下来就可以开始训练了S+= -DCUDNN -I/usr/local/cudnn/include LDFLAGS+= -L/usr/local/cudnn/lib64 -lcudnn endif endif ifeq ($(CUDNN_HALF), 1) COMMON+= -DCUDNN_HALF CFLAGS+= -DCUDNN_HALF ARCH+= -gencode arch =compute_70,code=[sm_70,compute_70] endif ifeq ($(ZED_CAMERA), 1) CFLAGS+= -DZED_STEREO -I/usr/local/zed/include ifeq ($(ZED_CAMERA_v2_8), 1) LDFLA 接下来就可以开始训练了GS+= -L/usr/local/zed/lib -lsl_core -lsl_input -lsl_zed else LDFLAGS+= -L/usr/local/zed/lib -lsl_zed endif endif OBJ=image_opencv.o http_stream.o gemm.o utils.o dark_cuda.o convolutional_layer.o list.o image.o activations.o im2col.o col2im.o blas.o crop_layer.o dropout_layer.o maxpool_layer.o softmax_layer.o data.o matrix.o network.o connected_layer.o cost_layer.o parser.o option_list.o darknet.o detection_layer.o captcha.o route_layer.o writing.o box.o nightmare.o normalization_layer.o avgpool_layer.o coco.o dice.o yolo.o detector.o layer.o compare.o classifier.o local_layer.o swag.o shortcut_layer.o activation_layer.o rnn_layer.o gru_layer.o rnn.o rnn_vid.o crnn_layer.o demo.o tag.o cifar.o go.o batchnorm_layer.o art.o region_layer.o reorg_ 接下来就可以开始训练了layer.o reorg_old_layer.o super.o voxel.o tree.o yolo_layer.o gaussian_yolo_layer.o upsample_layer.o lstm_layer.o conv_lstm_layer.o scale_channels_layer.o sam_layer.o ifeq ($(GPU), 1) LDFLAGS+= -lstdc++ OBJ+=convolutional_kernels.o activation_kernels.o im2col_kernels.o col2im_kernels.o blas_kernels.o crop_layer_kernels.o dropout_layer_kernels.o maxpool_layer_kernels.o network_kernels.o avgpool_layer_kernels.o endif OBJS = $(addprefix $(OBJDIR), $(OBJ)) DEPS = $(wildcard src/*.h) Makefile include/darknet.h all: $(OBJDIR) backup results setchmod $(EXEC) $(LIBNAMESO) $(APPNAMESO) ifeq ($( 接下来就可以开始训练了LIBSO), 1) CFLAGS+= -fPIC $(LIBNAMESO): $(OBJDIR) $(OBJS) include/yolo_v2_class.hpp src/yolo_v2_class.cpp $(CPP) -shared -std=c++11 -fvisibility=hidden -DLIB_EXPORTS $(COMMON) $(CFLAGS) $(OBJS) src/yolo_v2_class.cpp -o $@ $(LDFLAGS) $(APPNAMESO): $(LIBNAMESO) include/yolo_v2_class.hpp src/yolo_console_dll.cpp $(CPP) -std=c++11 $(COMMON) $(CFLAGS) -o $@ src/yolo_console_dll.cpp $(LDFLAGS) -L ./ -l:$(LIBNAMESO) endif $(EXEC): $(OBJS) $(CPP) -std=c++11 $(COMMON) $(CFLAGS) $^ -o $@ $(LDFLAGS) 接下来就可以开始训练了 $(OBJDIR)%.o: %.c $(DEPS) $(CC) $(COMMON) $(CFLAGS) -c $< -o $@ $(OBJDIR)%.o: %.cpp $(DEPS) $(CPP) -std=c++11 $(COMMON) $(CFLAGS) -c $< -o $@ $(OBJDIR)%.o: %.cu $(DEPS) $(NVCC) $(ARCH) $(COMMON) --compiler-options "$(CFLAGS) " -c $< -o $@ $(OBJDIR): mkdir -p $(OBJDIR) backup: mkdir -p backup results: mkdir -p results setchmod: chmod +x *.shtest .PHONY: clean clean: rm -rf $(OBJS) $(EXEC) $(LIBNAMESO) $(APPNAMESO)

.cfg文件配置主要有三个地方,分别对应三个:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 [net] batch=64 subdivisions=64 width=640 height=480 channels=3 momentum=0.949 decay=0.0005 angle=0 saturation = 1.5 exposure = 1.5 hue=.1 learning_rate=0.001 burn_in=1000 max_batches = 8000 policy=steps steps=6400,7200 scales=.1,.1

每个yolo layer 前的最后一个conv内容

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 L2: batch=64 L3: subdivisions=16 L7: width=608 L8: height=608 L968: classes=2 L1056: classes=2 L1144: classes=2 L961: filters=21 L1049: filters=21 L1137: filters=21

1 ./darknet detector train data/my_dataset.data cfg/yolov4-my_dataset.cfg backup/yolov4-my_dataset.weights -map

1 ./darknet detector test ./cfg/porter.data ./cfg/porter.cfg ./back/yolov4.weights